Reporting the Machines: A Conference on AI, Democracy, and the Future of Journalism

With “Reporting the Machines,” Publix has created a platform where experts from journalism, academia, civil society, politics, and technology come together to discuss how artificial intelligence is transforming our lives, the public sphere, and democratic processes, as well as the responsibilities journalists bear in this change.

The conference is part of the Publix Tech Journalism Fellowship, funded by the Federal Government Commissioner for Culture and the Media and realized in cooperation with MIZ Babelsberg and 1014 Germany. “The more far-reaching the societal changes brought about by the use of AI, the more important it becomes for decision-makers and the public to develop an informed and critical approach to the technology. The conference demonstrated how journalism, as well as science and civil society, can contribute to this, and in how many diverse ways they are already doing so,” said Sarah Luisa Thiele, Head of MIZ Babelsberg.

Publix Director Maria Exner opened the conference with a clear call to create more spaces like this: spaces where systems, companies, and power structures behind AI can be critically discussed, along with the societal impacts they entail. The new fellowship aims to strengthen informed, independent reporting on technological developments. The high number of applications for the first call clearly shows that there is a strong demand for new support programs in tech journalism.

The conference began with a keynote by Prof. Dr. Annette Zimmermann from the University of Wisconsin–Madison, titled “The Prompt, The Press, and The Public Square: Keeping Big Tech in Check and Civil Society Strong.” Zimmermann raised the central question of why tech oligarchs seek to control public discourse so comprehensively, in both traditional and new media. She described a solidifying “expertocracy,” in which citizens feel they lack the necessary expertise to participate in AI debates. This learned helplessness strengthens the power of the tech industry, whose experts often pursue their own interests.

Using the example of so-called “Friend-AI” systems—digital companions that simultaneously monitor users—Zimmermann illustrated how AI can influence our behavior. She emphasized that behind seemingly neutral statements or technologies, there are often hidden political or societal assumptions that shape our perception.

AI is therefore not only a technical issue but also a deeply political one that requires democratic oversight and public accountability. Zimmermann stressed the importance of critical journalism in making these developments visible and strengthening civil society.

Tech Journalism as a Democratic Practice

Following the keynote, Sidney Fussell, investigative journalist and filmmaker from California, and Maria Exner discussed the responsibility of explaining complex technologies in ways that make their societal consequences visible. Fussell described how he moved from communication studies into tech journalism and why he always follows the principle: “We report not on machines, but on the people behind the machines.” For him, good tech reporting means testing ideas against real-world experience and showing how technology distributes, amplifies, or conceals power.

Using examples such as Flint, Michigan, where algorithms helped determine access to clean water, or the use of AI tools by the police to prevent mass shootings, Fussell illustrated how deeply technical systems already affect societal and democratic structures.

How AI Is Changing Public Life and Journalistic Practice

Prof. Dr. Wiebke Loosen (University of Hamburg) and Simon Berlin (Süddeutsche Zeitung, Social Media Watchblog) discussed how digitalization and automation are changing societal communication and what this means for journalism. Loosen highlighted that AI debates often have blind spots: journalists report on opportunities and risks but rarely examine the underlying data or assumptions of the systems.

A central role is played by the metaphors used to describe AI, such as “tool” or even “therapist.” These images shape how society understands technology and what expectations or fears are associated with it. Loosen advocated for analyzing these metaphors from a social-scientific perspective to understand how we bring AI “into existence” through language.

The talk illustrated how AI is changing everyday journalistic work: workflows, roles, and editorial responsibilities are shifting. In many newsrooms, the often-cited “human in the loop” mostly means additional review and control routines. At the same time, there is growing pressure to deploy AI before clear rules or ethical guidelines exist.

Berlin and Loosen discussed opportunities, such as making complex topics more understandable, generating alternative image descriptions, or offering personalized information services, as well as risks: lack of transparency, unclear responsibilities, and automated content that mimics journalistic formats without professional verification. Journalism thus faces the task of repositioning itself while critically educating the public about AI.

Best Practices: AI Between Support, Self-Reflection, and Critical Guidance

Next, three practical examples were presented, demonstrating the ethical, social, and practical questions that arise from working with AI.

Dr. Elena Heber from HelloBetter presented the AI companion “Ello,” which provides low-threshold, accessible mental health support. The system is based on a clear ethical framework that protects data privacy.

Jette Miller, founder of the app The Pearl, demonstrated how AI can be used for self-reflection and analyzing unconscious patterns. The app operates through language and metaphors and uses a deliberately limited dataset.

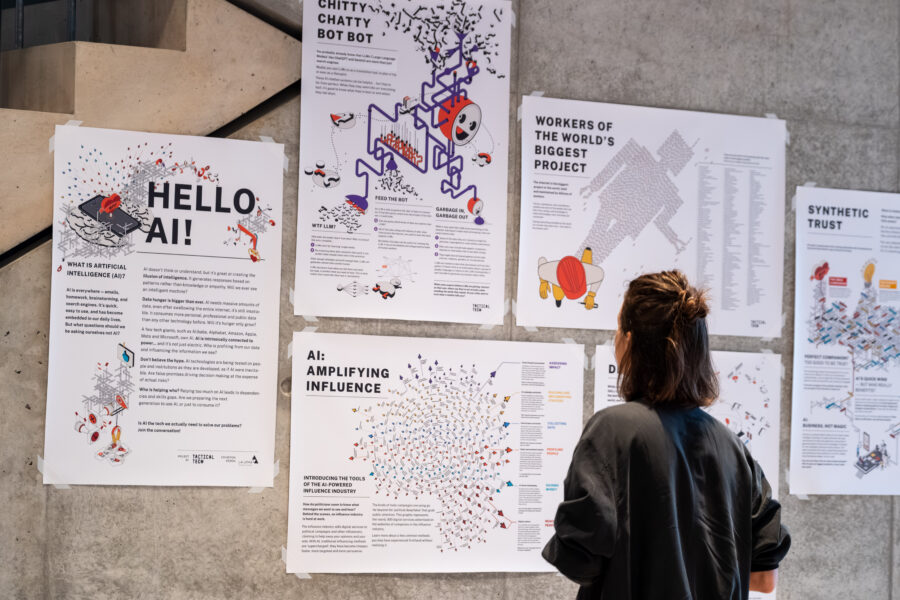

Stephanie Hankey, founder of the NGO Tactical Tech, showed how she advises NGOs on responsible AI use and supports journalists in both editorial practice and critical reporting.

Ethical Questions of Human-Machine Interaction

The conference concluded with a conversation between Prof. Dr. Aimee van Wynsberghe (University of Bonn) and Lisa Hegemann (DIE ZEIT) on “Can Machines Care? The Ethics of Human-Machine Interaction.” Van Wynsberghe explained the ethical challenges AI poses in learning processes, applications, and underlying methodologies.

Questions of responsibility and liability were central: Who is responsible when AI makes mistakes? Who is liable for harm in highly sensitive areas such as health, mobility, or public safety? Van Wynsberghe warned against shifting responsibility to “the technology,” emphasizing that it lies with the people who develop, deploy, or regulate the systems.

Another focus was the anthropomorphization of machines. Especially in care, therapy, or social robotics, people attribute qualities to AI systems that the systems do not possess. This leads to asymmetric relationships, emotional projections, and complex ethical dilemmas. Care robots, self-driving cars, or AI in policing show that many debates are less technological than societal. Often the problem lies not in the machine but in missing human resources or political priorities. Van Wynsberghe stressed that machines cannot take responsibility; the humans behind them carry the duty of care.

The premiere of “Reporting the Machines” demonstrated that AI debates go far beyond technical questions. They concern public life, democracy, justice, and how we define societal responsibility. The interdisciplinary exchange made clear how urgently independent, competent, and diverse tech journalism is needed and why programs like the new Publix Tech Journalism Fellowship are emerging right now.